In this post I look at the Fujifilm Instax EVO Mini camera and the Canon Selphy CP1500 printer. In a way I also compare the two a little, even though comparing a camera with a printer may sound slightly odd at first.

Before going any further, though, a short look at history is in order. I started photography in the 1970s with various cheap plastic cameras, and in the early 1980s I managed to get my first “real” camera, a film system camera with interchangeable lenses. I remember the toy-like feel of the plastic cameras, the colour casts of the lab-developed paper prints, the blur, the light leaks, the scratches, and the harsh lights and shadows produced by the disposable flashbulbs that popped so amusingly.

Throughout my decades of photography as a hobby, I have been used to striving for technically better image quality. Faster lenses and cameras capable of sharper images have followed one another. In the digital era in particular, development has been very rapid: the first hazy “postage stamp pictures” from the digital compacts of the early 2000s began to give way to detailed, richly toned and colourful photographs produced by ever more precise sensors and higher-quality lenses. Better controls in advanced cameras and a wide choice of lenses meant wider artistic as well as technical possibilities.

The market for instant cameras and instant film, which the Polaroid company dominated from the early 1970s onwards, passed me by completely at the time: our family never bought such a device, which shot on expensive special material. Around 2010, however, I began to notice that the limited cameras of early smartphones were being used to imitate the saturated, smudgy aesthetics of 1970s cheap cameras and instant cameras. I remember trying the filters of early photo apps such as Hipstamatic and Instagram, but I never quite grasped their point. I wanted my own pictures to be clear, sharp and otherwise optically as high in quality as possible. Taking image files from mobile phone cameras that were poor to begin with, and then manipulating them further to imitate the exposure and colour errors of cheap film cameras, did not seem sensible.

Now, in the 2020s, I find myself still wrestling with the same aesthetic questions. Is the technically most advanced camera necessarily always the “best”? Is a blurry or otherwise technically “flawed” photograph nevertheless a meaningful aesthetic choice in some situations?

Of the old Japanese camera companies that have carried on from the film era, Fujifilm has most systematically cherished a film-camera-style user experience and image aesthetic. As part of a broader movement away from modern efficiency thinking, film photography as a hobby, old cameras and lenses, and the “retro-aesthetic” products that imitate them have clearly grown in popularity over the past decade. I have shot almost exclusively with Canon cameras for over twenty years, but I have followed the development of Fujifilm's X series in particular with interest. Traditional physical dials for adjusting exposure, aperture and sensitivity are a sensible solution in terms of usability, and one would hope other manufacturers followed suit. Cameras that combine traditional, solid-looking metal with a black painted or leather finish are also undeniably stylish.

I have never bought a Fuji digital camera myself, because Canon's more tool-like approach has suited my own hobby better. After all, what a camera looks like is secondary if you mostly wander alone along misty lakeshores or through tangled forests. A beautiful, jewel-like camera is perhaps more at home bringing joy to its owner on a city break, at a family party or on the beach than in a nature photographer's hide.

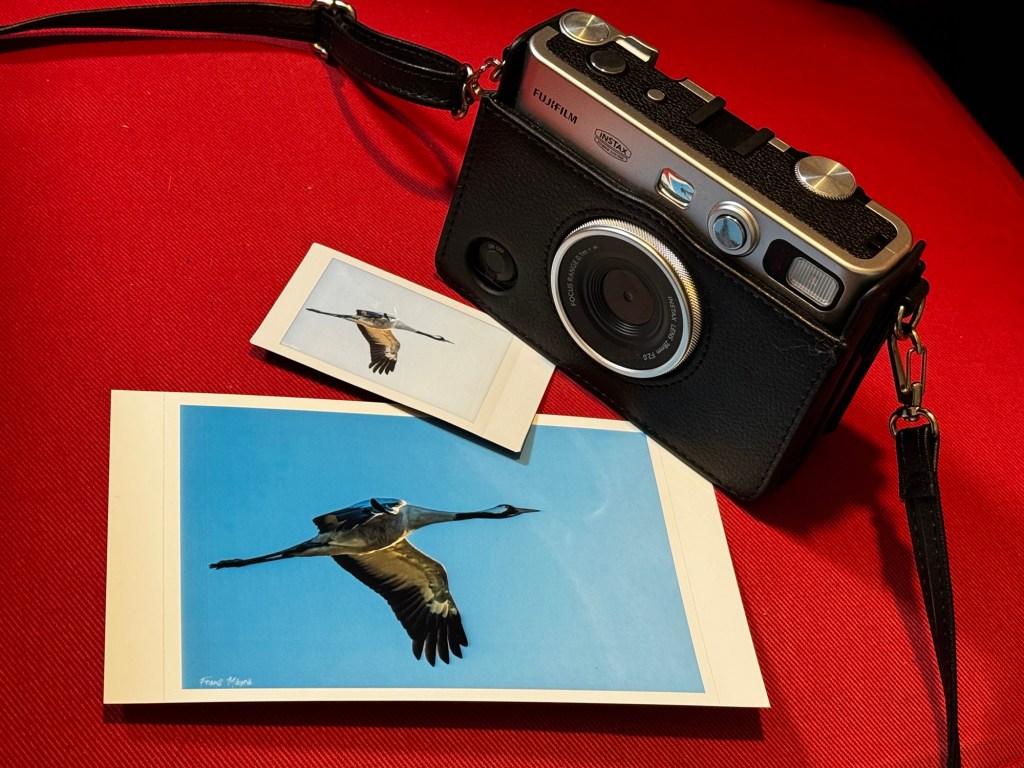

Some time ago, however, I received a Fuji Instax EVO Mini camera as a Christmas present, and I have been learning to use it and getting a little better acquainted with instant-film-style photo aesthetics. The EVO Mini is not a pure instant camera in the sense that every press of the shutter would produce an analogue copy as a physical paper print. In its Instax EVO camera family, Fuji has combined a shooting aesthetic centred on “film simulations” and filters with a hybrid camera that has an ingenious combination of a digital camera and a printer for instax photo paper built in. The camera's physical dials are thus used to adjust the desired retro-aesthetic look, while the traditional “film advance lever” prints the selected digital image on paper. The resulting print is small (62 x 46 mm) and fairly low in resolution (at most 1600 x 600 pixels, and only 800 x 600 pixels when printed from the smartphone app).

The Instax EVO is nevertheless fun to handle, and the small physical prints it produces are great, especially in the right social situation. Photographic technique is not what matters most if the aim is simply to capture the mood of the moment and to get a tangible memento that can be handed straight to, say, a guest at a party.

A technically “bad” picture is also a kind of thought experiment for the photography enthusiast: what ultimately matters most in this hobby? Is it the process and event of taking pictures, the experience and mood of the moment, or the technical end result? Such questions keep becoming more central now that editing and manipulating digital images has become perhaps as important as the “raw image” created purely with light, shadow and composition, or, depending on the genre of photography, even more important. AI techniques also challenge the photographer. If a computer program can perhaps already produce technically more brilliant and impressive results than an advanced amateur, or possibly even a professional, is capable of, and do so in a few seconds, then perhaps the “aura of the photograph” (its sense of uniqueness and presence, loosely following Walter Benjamin here) ultimately depends to a significant degree on various imperfections and on traces in the picture itself that tell of living in a fleeting moment. It seems that some photography enthusiasts have, for example, grown thoroughly tired of spending more and more time in front of image editing software, and have therefore switched to an analogue or hybrid instant camera.

I am perhaps not ready (at least not yet) to abandon technically advanced system cameras and the effort put into post-processing altogether. But it is nice that a clearly different camera like this is available as an alternative. In practice, the uses of the Instax EVO Mini are very limited, and in my opinion they fall more clearly on the side of fun and of providing entertainment in social situations. In my previous blog post (link) I considered the interesting possibilities of older, compact digital cameras (my own examples being the Sony RX100 pocket camera and the Canon EOS M50 system camera with its lenses) as photographic tools that are easy to carry along yet still high in quality.

Instax or Polaroid-style instant photography is not the only alternative to a heavy professional-grade system camera and lens; there can be many different “point-and-shoot photo cultures” that rely on faster and lighter image processing. One interesting tool in this direction is a small “10 x 15 cm photo printer”, which in my case has been the Canon Selphy CP1500. Here, too, the print resolution is not very high (300 x 300 dpi, which in any case means a considerably larger pixel count than on the Fuji), but the printer uses dye-sublimation printing, in which a thermal transfer process coats each print with four separate layers (the three primary colours, CMY, plus a glossy protective coating). Canon promises that the archival-grade prints will keep their colours for 100 years, which of course sounds good. The prints are of high quality and would suit even a professional photographer, for instance for producing quick proof prints from a portrait session.
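The pixel-count comparison can be checked with simple arithmetic. The following is only a rough sketch: it assumes that the Selphy prints on postcard-size paper of about 100 x 148 mm (the paper size is my assumption here, not a figure given above), and the function names are purely illustrative.

```python
MM_PER_INCH = 25.4


def pixels_for_print(width_mm, height_mm, dpi):
    """Return (width_px, height_px) for a print of the given size at the given dpi."""
    width_px = round(width_mm / MM_PER_INCH * dpi)
    height_px = round(height_mm / MM_PER_INCH * dpi)
    return width_px, height_px


def megapixels(size):
    """Total pixel count of a (width_px, height_px) pair, in millions."""
    width_px, height_px = size
    return width_px * height_px / 1_000_000


# Assumption: postcard-size paper, roughly 100 x 148 mm, printed at 300 dpi.
selphy = pixels_for_print(100, 148, 300)

# Figures quoted in the text for the Instax EVO Mini.
instax_from_camera = (1600, 600)
instax_from_app = (800, 600)

for name, size in [
    ("Selphy CP1500 (assumed 100 x 148 mm)", selphy),
    ("Instax print from camera", instax_from_camera),
    ("Instax print from phone app", instax_from_app),
]:
    print(f"{name}: {size[0]} x {size[1]} px, {megapixels(size):.2f} MP")
```

Under these assumptions the Selphy print works out to roughly two megapixels, against just under one megapixel for the Instax print made from the camera and about half a megapixel for one made from the phone app.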

I have found that being able to shoot with different cameras, in whatever way suits the situation at hand, is both sensible and fun. It is great to be able to produce either unedited images straight from the camera or ones enhanced with “filters”, to shoot sometimes with a long professional lens, sometimes with a macro lens on a system camera, sometimes with a compact camera or a smartphone, and then to edit the results as needed on a computer or in a phone app. It is also very handy if all these different pictures can, when one wishes, be shared on various websites, on social media or on a blog, or turned into that smaller or larger paper print. All this supports the idea that photography is a highly diverse and flexible art form, hobby, and channel for craftsmanship and self-expression.

